Amendment to IT Rules, 2021 — Accountability in Social Media Takedown Orders

Context

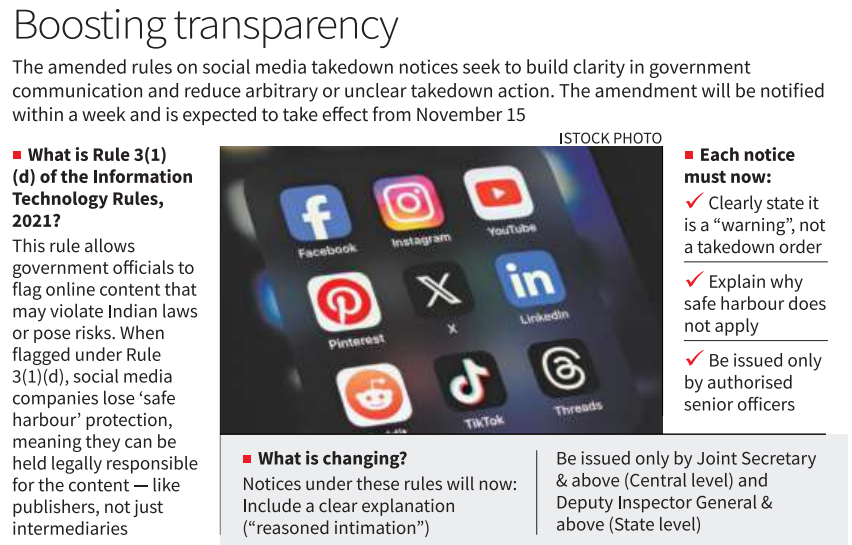

The Union Ministry of Electronics and Information Technology (MeitY) is set to amend the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021.

The aim is to make government officers who issue takedown or flagging notices to social media platforms more accountable.

Key Provisions

Accountability Clause Introduced

Officers issuing content takedown or warning notices must provide a reasoned intimation, that is, a written statement of the grounds for the notice.

Orders will be issued only by senior officials:

Joint Secretary and above – Central Government.

Deputy Inspector-General (DIG) and above – State level.

Rule Involved

The amendment pertains to Rule 3(1)(d) of the IT Rules, 2021.

This rule empowers officials to flag specific content, after which platforms lose their “safe harbour” protection (legal immunity for user-generated content) for that content.

Clarification Requirement

Notices under Rule 3(1)(d) must clearly state:

They serve as a warning that safe harbour protection no longer applies to the specified content.

They are not immediate takedown orders.

Safe Harbour Concept

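Under Section 79 of the IT Act, 2000, intermediaries are exempt from liability for third-party content if they act as neutral platforms, i.e., they do not initiate the transmission, select its receiver, or modify its content, and they observe due diligence.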

If content is flagged under Rule 3(1)(d), platforms may lose this immunity for that content, making them legally responsible for it.

Objective of the Amendment

To ensure transparency and procedural fairness in content regulation.

To prevent arbitrary censorship or misuse of takedown powers.

To enhance government accountability in digital governance.

Timeline

The amendment is expected to be notified within the week.

It will come into effect on November 15, 2025.

Judicial Context

Platform involved: X (formerly Twitter) had challenged Rule 3(1)(d) in the Karnataka High Court, calling it unconstitutional and arbitrary.

Judgment: The court upheld the government’s power to issue such notices.

Official clarification: The new safeguards are not linked to this case but are aimed at improving procedural clarity.

Significance

Promotes responsible governance in digital content regulation.

Strengthens checks and balances between government and tech intermediaries.

Reinforces rule-based digital regulation amid growing debates over misinformation and censorship.

Challenges

Maintaining a balance between free speech and content regulation.

Ensuring consistent implementation across States.

Risk of bureaucratic delays or subjective interpretation of “harmful content.”

Way Forward

Establish standard operating procedures (SOPs) for issuing notices.

Integrate transparency reports by platforms on flagged content.

Align amendments with the upcoming Digital India Act framework for holistic governance.