Google’s Project Suncatcher

Context

Datacentres account for a rapidly growing share of global electricity consumption.

Artificial Intelligence workloads significantly increase power demand due to:

Dense clusters of GPUs / specialised accelerators

Energy-intensive training and deployment of large language models

The generative AI boom shows no signs of slowing, intensifying concerns over:

Energy availability

Sustainability

Carbon footprint

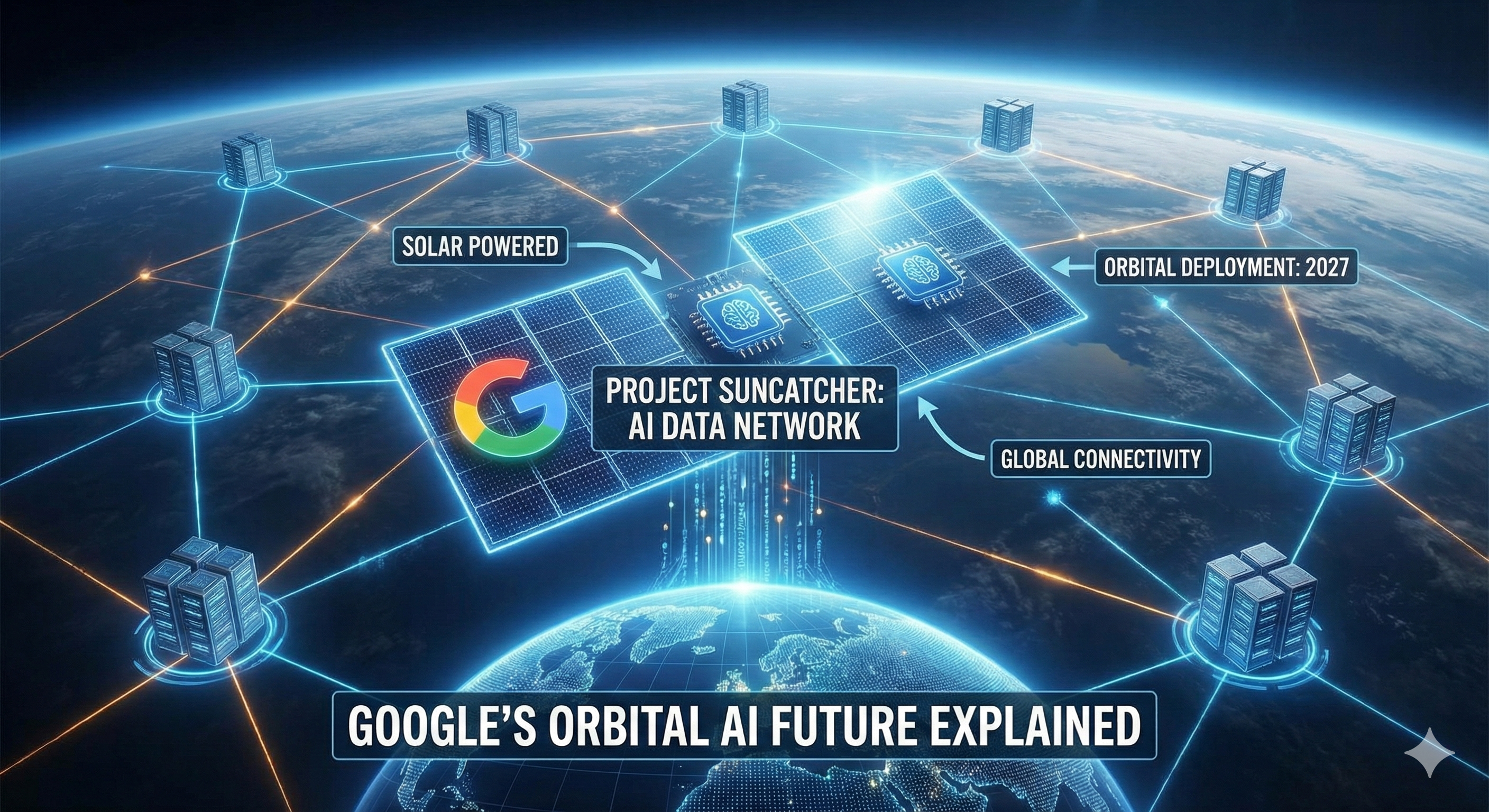

Google’s Project Suncatcher: Core idea

Google Research is exploring space-based datacentres.

Concept:

Place AI datacentres in Low Earth Orbit (LEO).

Power them entirely using solar energy.

Motivation:

Avoid terrestrial energy constraints.

Bypass land, cooling, and grid limitations.

The Indian Space Research Organisation (ISRO) is also reportedly studying space-based datacentre technology.

Why AI datacentres are different from traditional datacentres

Traditional datacentres

Demand driven mainly by content consumption (e.g., video streaming).

Bandwidth requirement:

Roughly equal between internal systems and external users.

AI datacentres

Require extremely high internal bandwidth, not user-facing bandwidth.

Large-scale distributed machine learning workloads demand:

High-speed communication within and between nearby datacentres.

Example:

Microsoft’s Fairwater AI datacentre complexes

Use petabit-per-second links between facilities.

Around one million times faster than a typical consumer internet connection (taking roughly 1 Gbit/s as typical).
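The "million times" figure follows from the SI prefixes alone. A minimal sketch of the arithmetic, assuming a 1 Gbit/s consumer connection (an illustrative baseline, not a figure from Microsoft):

```python
# Compare a petabit-per-second inter-facility link with a consumer connection.
PETABIT_PER_S = 10**15        # 1 Pbit/s expressed in bits per second
CONSUMER_BITS_PER_S = 10**9   # assumed 1 Gbit/s home connection (illustrative)


def speed_ratio(link_bps: int, consumer_bps: int) -> float:
    """Return how many times faster the link is than the consumer line."""
    if consumer_bps <= 0:
        raise ValueError("consumer_bps must be positive")
    return link_bps / consumer_bps


ratio = speed_ratio(PETABIT_PER_S, CONSUMER_BITS_PER_S)
print(f"{ratio:,.0f}x")  # 1,000,000x
```

With a slower baseline such as 100 Mbit/s, the same function gives a ratio of ten million, so the headline number depends on what is taken as "typical".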

Networking logic for orbital datacentres

Similar logic applies in space:

Most bandwidth used between satellites, not with Earth.

Ground communication only needed for:

Input queries

Output responses

As with the infrastructure behind ChatGPT:

Massive internal bandwidth

Minimal user-facing bandwidth

Architecture of Project Suncatcher

Inspired by Starlink, but with key differences:

Not a dispersed global swarm.

Densely choreographed satellite clusters.

Key features:

Satellites positioned a few kilometres apart.

Orbit chosen to keep the satellites in near-constant sunlight (a dawn-dusk sun-synchronous orbit).

Heavy reliance on:

Inter-satellite links

Multiplexing (packing more data into a single optical beam)
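Multiplexing raises link capacity by carrying several independent channels over one beam, so aggregate throughput is roughly the channel count times the per-channel rate. A small sketch of that relationship; the channel counts and rates are hypothetical, not Suncatcher specifications:

```python
import math


def aggregate_gbps(channels: int, gbps_per_channel: float) -> float:
    """Total throughput of one multiplexed link, ignoring protocol overhead."""
    if channels < 0 or gbps_per_channel < 0:
        raise ValueError("inputs must be non-negative")
    return channels * gbps_per_channel


def channels_needed(target_gbps: float, gbps_per_channel: float) -> int:
    """Smallest number of channels that reaches the target throughput."""
    if gbps_per_channel <= 0:
        raise ValueError("gbps_per_channel must be positive")
    return math.ceil(target_gbps / gbps_per_channel)


# Hypothetical example: 16 channels of 100 Gbit/s each on one beam.
print(aggregate_gbps(16, 100.0))        # 1600.0
# Hypothetical example: channels required for 10 Tbit/s at 100 Gbit/s each.
print(channels_needed(10_000, 100.0))   # 100
```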

Result:

High computational coordination.

Continuous solar power availability.
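One orbit class that gives near-constant sunlight is the dawn-dusk sun-synchronous orbit, whose plane precesses once per year to stay aligned with the Sun. As a rough sketch, assuming a circular orbit and a first-order J2 model of Earth's oblateness, the required inclination can be estimated as below. The 650 km altitude is an illustrative assumption, not a figure from these notes.

```python
import math

MU_EARTH = 3.986004418e14             # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6_378_137.0                 # Earth's equatorial radius, m
J2 = 1.08263e-3                       # Earth's second zonal harmonic
SECONDS_PER_YEAR = 365.2422 * 86_400  # tropical year, s


def sun_sync_inclination_deg(altitude_km: float) -> float:
    """Inclination of a circular sun-synchronous orbit (first-order J2 only)."""
    a = R_EARTH + altitude_km * 1_000.0         # orbit radius, m
    n = math.sqrt(MU_EARTH / a**3)              # mean motion, rad/s
    required = 2.0 * math.pi / SECONDS_PER_YEAR # one full nodal turn per year
    # Nodal precession rate: -1.5 * J2 * (R/a)^2 * n * cos(i); solve for cos(i).
    cos_i = -required / (1.5 * J2 * (R_EARTH / a) ** 2 * n)
    if cos_i < -1.0:
        raise ValueError("no sun-synchronous orbit exists at this altitude")
    return math.degrees(math.acos(cos_i))


print(f"{sun_sync_inclination_deg(650.0):.1f} degrees")  # roughly 98 degrees
```

The result is slightly retrograde (above 90 degrees), which is characteristic of sun-synchronous orbits in LEO.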

Radiation and hardware reliability

Major concern: solar and cosmic radiation damaging chips.

Findings from Google Research:

High Bandwidth Memory (HBM) was the most radiation-sensitive component.

Irregularities began after:

2 krad(Si) cumulative dose.

Expected five-year shielded dose:

~750 rad(Si).

No hard failures observed up to:

15 krad(Si) on a single chip.

Conclusion:

Google's Trillium TPUs proved unexpectedly radiation-hard for space use: HBM irregularities appeared only at nearly three times the expected five-year dose.
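The safety margins implied by these figures can be checked directly. A minimal sketch using only the doses quoted above; the "years to threshold" estimate additionally assumes dose accumulates linearly over time, which is a simplification:

```python
EXPECTED_5YR_DOSE_RAD = 750     # expected shielded five-year dose, rad(Si)
HBM_IRREGULARITY_RAD = 2_000    # 2 krad(Si): first HBM irregularities
MAX_TESTED_RAD = 15_000         # 15 krad(Si): no hard failures up to here
MISSION_YEARS = 5


def margin(threshold_rad: float, mission_dose_rad: float) -> float:
    """Ratio of a tested dose threshold to the expected mission dose."""
    if mission_dose_rad <= 0:
        raise ValueError("mission_dose_rad must be positive")
    return threshold_rad / mission_dose_rad


hbm_margin = margin(HBM_IRREGULARITY_RAD, EXPECTED_5YR_DOSE_RAD)
max_margin = margin(MAX_TESTED_RAD, EXPECTED_5YR_DOSE_RAD)
# Assumption: constant dose rate, so time to threshold scales with the margin.
years_to_hbm_threshold = MISSION_YEARS * hbm_margin

print(f"HBM margin: {hbm_margin:.2f}x")                  # HBM margin: 2.67x
print(f"Max tested margin: {max_margin:.0f}x")           # Max tested margin: 20x
print(f"Years to HBM threshold: {years_to_hbm_threshold:.1f}")
```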

Key engineering challenges

1. Maintenance

Datacentres require continuous servicing.

In orbit:

No low-cost or rapid access for repairs.

Faulty satellites may need replacement via fresh launches.

2. Thermal management

On Earth:

Air and liquid cooling are standard; both carry heat away by convection.

In space:

Constant solar exposure.

Heat must be dissipated in a vacuum, where convection is impossible, so it can only be radiated away.

Requires:

Advanced radiators and thermal control systems.
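Because radiation is the only way to shed heat in vacuum, radiator size follows from the Stefan-Boltzmann law, P = εσAT⁴. A rough sketch of the sizing arithmetic; the 100 kW heat load, 300 K radiator temperature, and 0.9 emissivity are hypothetical values, and the model ignores absorbed sunlight and heat radiated back from Earth:

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def radiator_area_m2(heat_w: float, temp_k: float, emissivity: float = 0.9) -> float:
    """Radiating area needed to reject heat_w at surface temperature temp_k.

    Simplified: radiates to deep space only, with no absorbed solar or
    Earth-emitted flux (both would increase the required area).
    """
    if heat_w < 0 or temp_k <= 0 or not 0 < emissivity <= 1:
        raise ValueError("invalid input")
    flux_w_per_m2 = emissivity * STEFAN_BOLTZMANN * temp_k**4
    return heat_w / flux_w_per_m2


# Hypothetical 100 kW compute payload with a 300 K radiator.
area = radiator_area_m2(100_000.0, 300.0)
print(f"{area:.0f} m^2")  # about 242 m^2
```

Since the flux scales with the fourth power of temperature, running the radiator hotter shrinks it sharply, which is one reason thermal design is coupled to how hot the chips are allowed to run.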

Economic feasibility: The biggest hurdle

Space-based datacentres must compete with ground-based alternatives.

Costs include:

Research and development

Launching satellite clusters

Replacing failed satellites

Google’s assumptions:

Satellite launch costs may fall to about $200 per kg by the mid-2030s.

Solar-powered architecture could yield major energy cost savings.

Business viability depends on:

Whether space solutions outpace improvements in terrestrial datacentres.
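To get a feel for the launch and replacement terms, a back-of-the-envelope sketch using the $200 per kg assumption. The satellite count, mass per satellite, and failure rate are hypothetical placeholders, and R&D, hardware, and operations costs are left out:

```python
def launch_cost_usd(mass_kg: float, usd_per_kg: float = 200.0) -> float:
    """Cost of launching a given mass at a flat price per kilogram."""
    if mass_kg < 0 or usd_per_kg < 0:
        raise ValueError("inputs must be non-negative")
    return mass_kg * usd_per_kg


def cluster_launch_cost_usd(
    n_sats: int,
    mass_per_sat_kg: float,
    usd_per_kg: float = 200.0,
    annual_failure_rate: float = 0.0,  # hypothetical fraction replaced per year
    years: int = 0,
) -> float:
    """Initial launch cost plus replacement launches over the given period."""
    initial = n_sats * launch_cost_usd(mass_per_sat_kg, usd_per_kg)
    replacements = initial * annual_failure_rate * years
    return initial + replacements


# Hypothetical cluster: 100 satellites of 500 kg each.
print(cluster_launch_cost_usd(100, 500.0))  # 10000000.0
# Same cluster with 5% of satellites replaced each year for 5 years.
print(cluster_launch_cost_usd(100, 500.0, annual_failure_rate=0.05, years=5))
```

Even a modest failure rate adds a recurring launch bill, which is why replacement appears alongside the initial launch in the cost list.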

Lessons from past experiments

Microsoft Natick project:

Experimented with underwater datacentres for cooling.

Ultimately abandoned despite technical promise.

Shows:

Innovative datacentre ideas face economic and operational barriers.

Broader perspective

Technological scepticism often ages poorly:

Starlink’s success was widely doubted in 2019.

Space-based datacentres:

High-risk, high-reward concept.

Could reshape AI infrastructure if the technical and economic hurdles are overcome.

Prelims practice MCQs

Q. With reference to AI datacentres, consider the following statements:

1. AI datacentres require significantly higher internal bandwidth than user-facing bandwidth.

2. Training large language models relies heavily on dense clusters of GPUs.

3. AI datacentres consume less electricity than traditional content-driven datacentres.

Which of the statements given above are correct?

A. 1 and 2 only

B. 2 and 3 only

C. 1 and 3 only

D. 1, 2 and 3

Correct answer: A

Explanation:

Statements 1 and 2 are correct: AI datacentres need very high internal bandwidth for distributed machine learning workloads and depend on dense GPU clusters. Statement 3 is incorrect: their electricity consumption is higher, not lower, than that of traditional datacentres.

Q. Google’s Project Suncatcher primarily proposes to:

A. Place underwater datacentres for improved cooling

B. Launch AI datacentres into Low Earth Orbit powered by solar energy

C. Replace GPUs with quantum processors in terrestrial datacentres

D. Use geostationary satellites to host cloud services for consumers

Correct answer: B

Explanation:

Project Suncatcher explores placing AI datacentres in Low Earth Orbit and powering them entirely using solar energy.

Q. Why is downlink bandwidth with Earth considered less critical for space-based AI datacentres?

A. Satellites process data independently without coordination

B. Most data transfer occurs between satellites within the constellation

C. AI workloads do not require real-time data transmission

D. Ground stations provide unlimited bandwidth

Correct answer: B

Explanation:

The architecture relies on intense inter-satellite communication for distributed workloads, while Earth-facing bandwidth is needed only for user queries and responses.